

Shared memory data transfer between Functions Host and Python worker #816



cc @goiri


This PR is well documented. Great work!

Pinging @vrdmr, as you may also want to take a look.

57ebbb9 to e59c6fe

…fore passing to the function


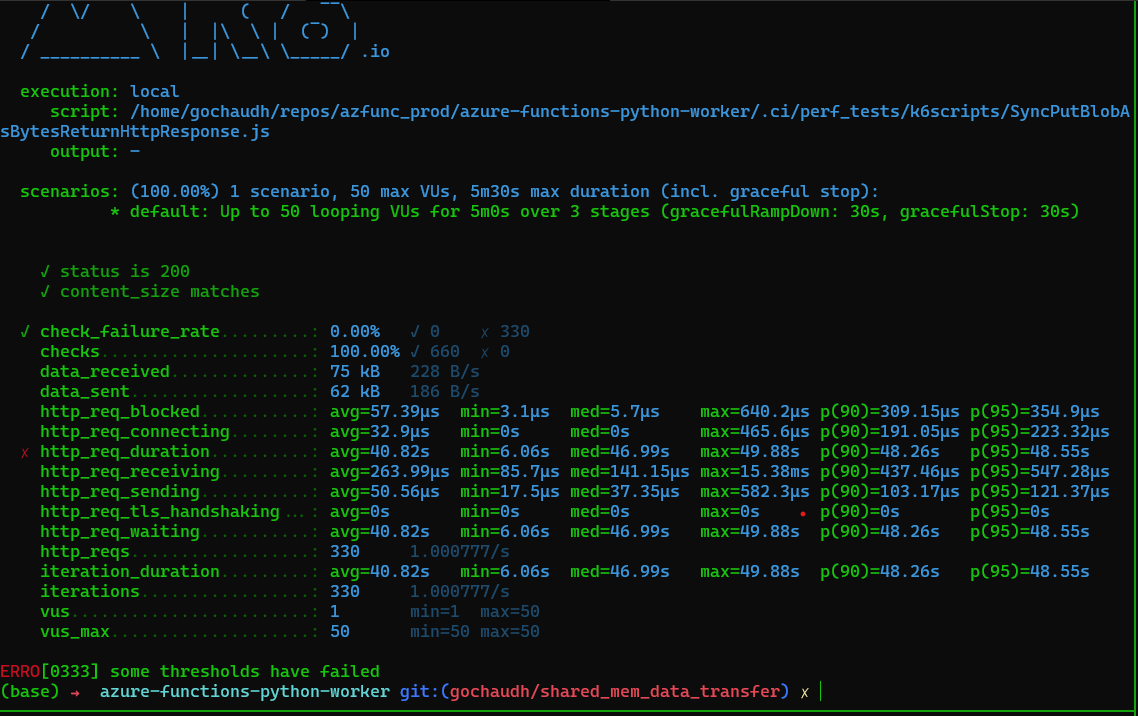

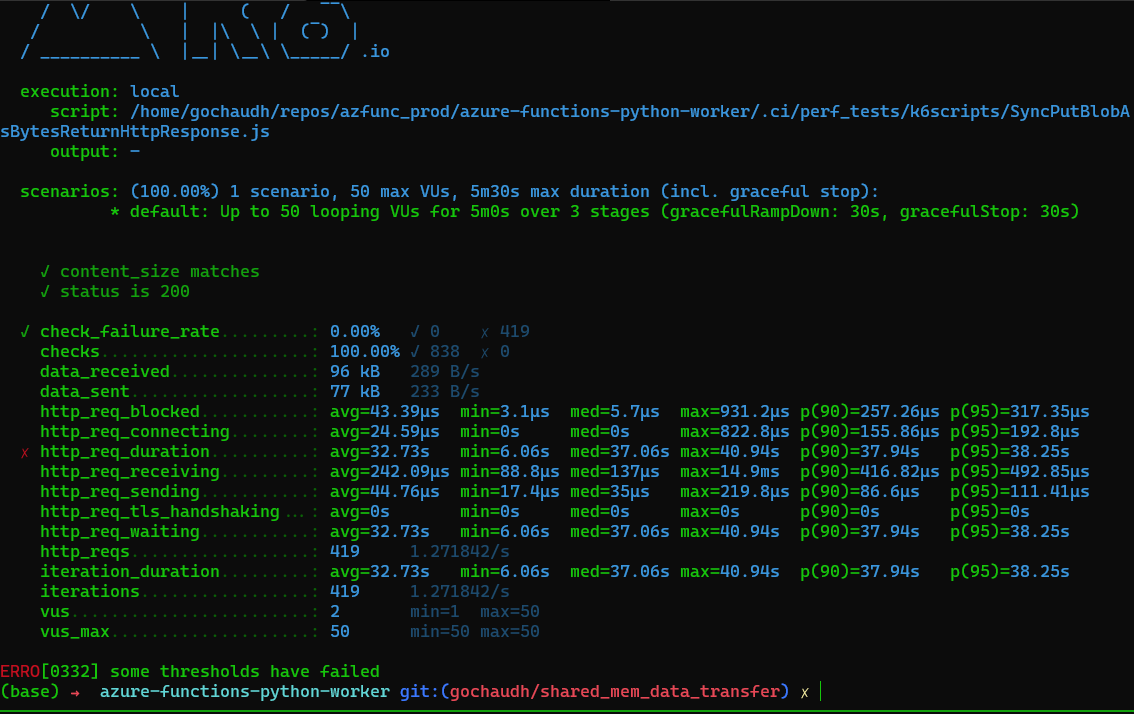

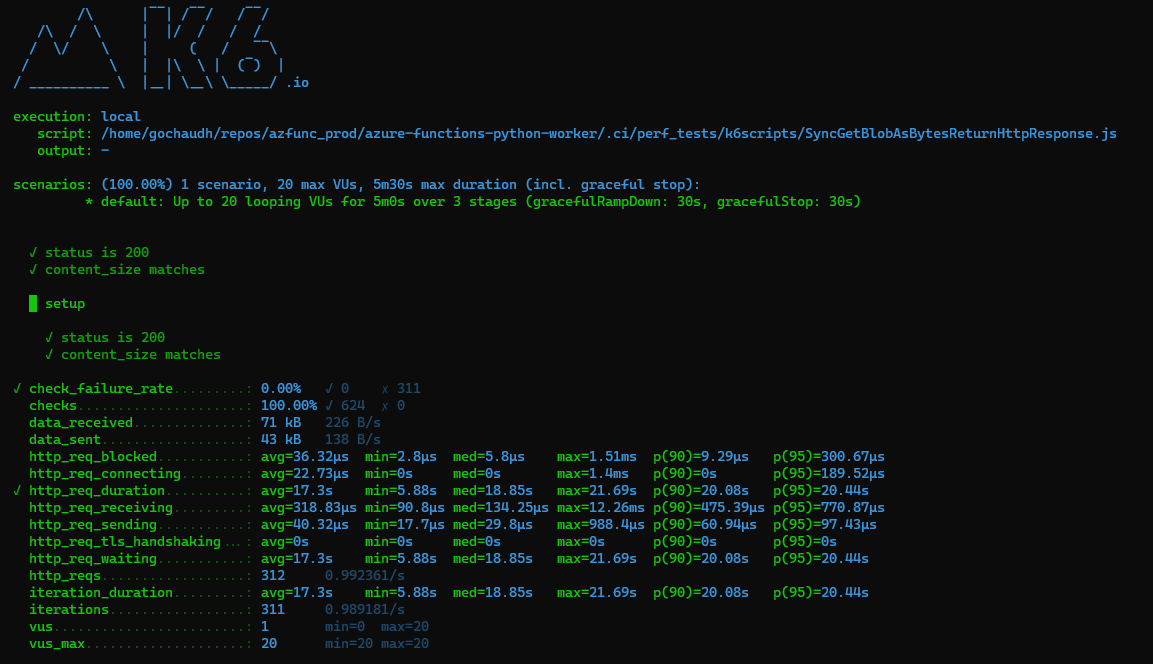

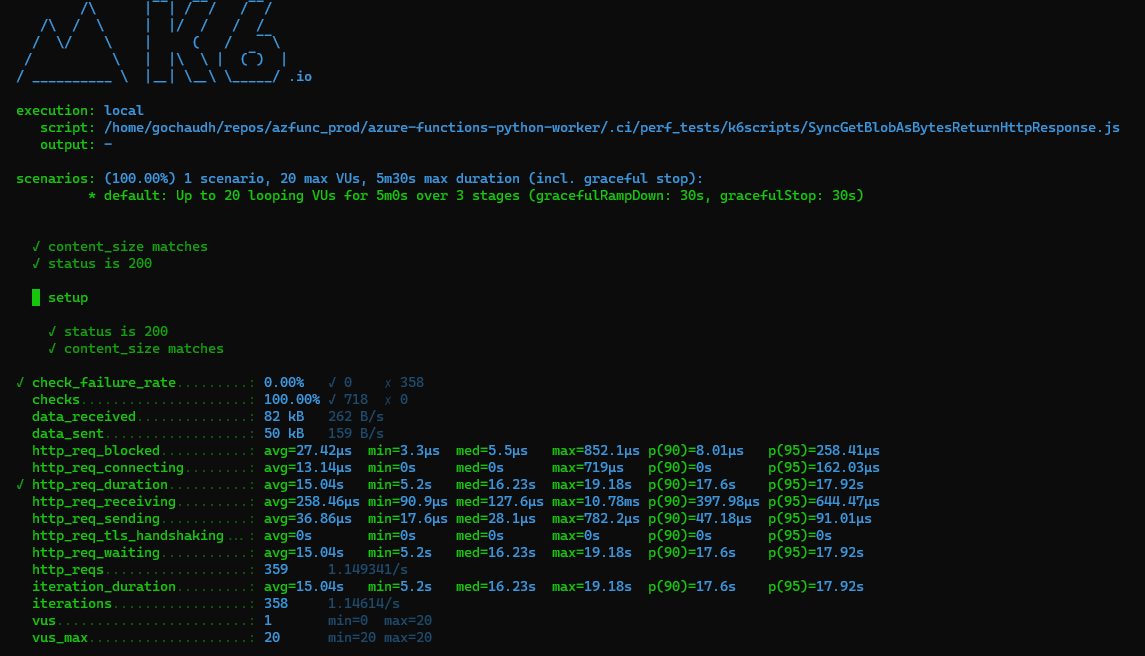

@vrdmr @goiri @Hazhzeng Results: a ~21% reduction in median and P95 time for 256 MB writes. We can do a deeper analysis to collect more data points across different payload sizes, and will do the same for reads.

azure_functions_worker/bindings/shared_memory_data_transfer/file_accessor_unix.py
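For readers unfamiliar with the mechanism under review, the following is a minimal sketch of how a Unix file accessor for shared memory can work: one process creates a named, fixed-size memory-mapped file, and another opens the same name and reads the bytes directly instead of receiving them over the RPC channel. It assumes a RAM-backed `/dev/shm` directory (falling back to the system temp directory when that is absent). The helper names `create_mem_map`, `open_mem_map` and `delete_mem_map` are hypothetical and are not the actual API in `file_accessor_unix.py`.

```python
import mmap
import os
import tempfile

# Assumption: on Linux, /dev/shm is a RAM-backed tmpfs, so files there do not
# hit disk. Fall back to the system temp dir if it is unavailable.
SHM_DIR = "/dev/shm" if os.path.isdir("/dev/shm") else tempfile.gettempdir()


def create_mem_map(name: str, size: int) -> mmap.mmap:
    """Create a new named memory map of `size` bytes (hypothetical helper)."""
    path = os.path.join(SHM_DIR, name)
    # O_EXCL makes creation fail if another map with this name already exists.
    fd = os.open(path, os.O_CREAT | os.O_EXCL | os.O_RDWR, 0o600)
    try:
        os.ftruncate(fd, size)  # give the backing file its final size
        # MAP_SHARED: writes are visible to every process mapping this file.
        return mmap.mmap(fd, size, mmap.MAP_SHARED,
                         mmap.PROT_READ | mmap.PROT_WRITE)
    finally:
        os.close(fd)  # the mapping stays valid after the descriptor is closed


def open_mem_map(name: str, size: int) -> mmap.mmap:
    """Open a memory map created by another process (hypothetical helper)."""
    path = os.path.join(SHM_DIR, name)
    fd = os.open(path, os.O_RDWR)
    try:
        return mmap.mmap(fd, size, mmap.MAP_SHARED,
                         mmap.PROT_READ | mmap.PROT_WRITE)
    finally:
        os.close(fd)


def delete_mem_map(name: str, mem_map: mmap.mmap) -> None:
    """Unmap and remove the backing file (hypothetical helper)."""
    mem_map.close()
    os.unlink(os.path.join(SHM_DIR, name))


if __name__ == "__main__":
    name = f"demo-{os.getpid()}"
    payload = b"hello from the host"
    writer = create_mem_map(name, len(payload))
    writer[:] = payload                      # the "host" side writes
    reader = open_mem_map(name, len(payload))
    print(bytes(reader[:]))                  # the "worker" side reads
    reader.close()
    delete_mem_map(name, writer)
```

In this sketch only the map name and size would need to travel over the RPC channel. The payload itself is copied into and out of shared memory, which is where savings of the kind reported above for large (e.g. 256 MB) writes could come from.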


The second round of comments.

azure_functions_worker/bindings/shared_memory_data_transfer/file_accessor_unix.py


azure_functions_worker/bindings/shared_memory_data_transfer/file_accessor_unix.py


Description

Fixes Azure/azure-functions-host#6791

Moved from this PR.

PR information

Quality of Code and Contribution Guidelines